Question 1

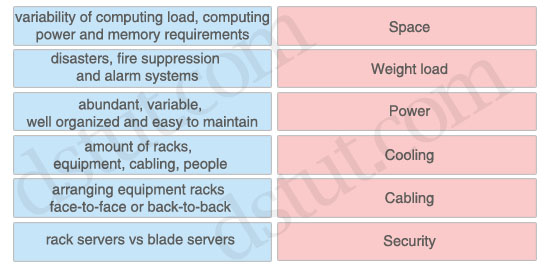

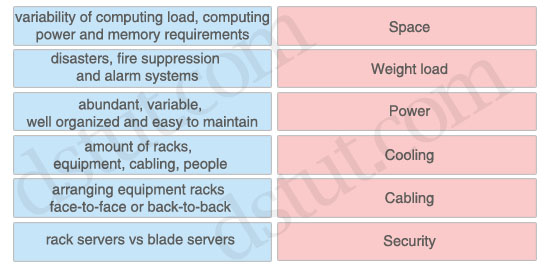

Drag the data center property on the left to the design aspect on the right it is most apt to affect

Answer:

Space: amount of racks, equipment, cabling, people

Weight load: rack servers vs blade servers

Power: variability of computing load, computing power and memory requirements

Cooling: arranging equipment racks face-to-face or back-to-back

Cabling: abundant, variable, well organized and easy to maintain

Security: disasters, fire suppression and alarm systems

Explanation

The data center space must accommodate the number of racks for the equipment that will be installed. Another factor to consider is the number of employees who will work in the data center.

Rack servers are low cost and provide high performance, but they take up space and consume a lot of energy to operate. Blade servers provide similar computing power when compared to rack-mount servers, but require less space, power, and cabling. The chassis in most blade servers allows for shared power, Ethernet LAN, and Fibre Channel SAN connections, which reduces the number of cables needed.

The power in the data center facility is used to power cooling devices, servers, storage equipment, the network, and some lighting equipment. In server environments, the power usage depends on the computing load placed on the server. For example, if the server needs to work harder by processing more data, it has to draw more AC power from the power supply, which in turn creates more heat that needs to be removed.
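As a rough illustration of how computing load drives both power and cooling, the sketch below converts electrical draw into heat load using the standard conversion of about 3.412 BTU/hr per watt. The linear idle-to-peak power model and the wattage figures are assumptions made for this example, not vendor data.

```python
BTU_PER_HR_PER_WATT = 3.412  # standard conversion: 1 W of draw is about 3.412 BTU/hr of heat


def server_power_watts(idle_w: float, peak_w: float, utilization: float) -> float:
    """Estimate power draw with a simple linear model (an assumption for illustration)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_w + (peak_w - idle_w) * utilization


def cooling_load_btu_per_hr(power_w: float) -> float:
    """Treat all electrical power drawn by the server as heat to be removed."""
    return power_w * BTU_PER_HR_PER_WATT


if __name__ == "__main__":
    # Hypothetical server: 200 W idle, 500 W at full load.
    for load in (0.2, 0.8):
        watts = server_power_watts(idle_w=200, peak_w=500, utilization=load)
        print(f"load {load:.0%}: {watts:.0f} W, {cooling_load_btu_per_hr(watts):.0f} BTU/hr")
```

The point of the sketch is only the direction of the relationship: a higher computing load means more watts drawn and proportionally more heat for the cooling system to handle.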

Cooling is used to control the temperature and humidity of the devices. The cabinets and racks should be arranged in the data center with an alternating pattern of “cold” and “hot” aisles. The cold aisle should have equipment arranged face to face, and the hot aisle should have equipment arranged back to back. In the cold aisle, there should be perforated floor tiles drawing cold air from the floor into the face of the equipment. This cold air passes through the equipment and flushes out the back into the hot aisle. The hot aisle does not have any perforated tiles, and this design prevents the hot air from mixing with the cold air.

The cabling in the data center is known as the passive infrastructure. Data center teams rely on a structured and well-organized cabling plant. It is important for cabling to be easy to maintain, abundant and capable of supporting various media types and requirements for proper data center operations.

Fire suppression and alarm systems are considered physical security and should be in place to protect equipment and data from natural disasters and theft.

Question 2

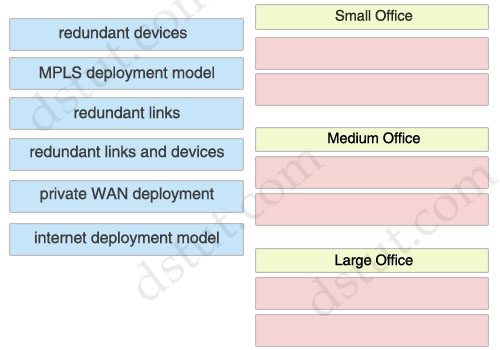

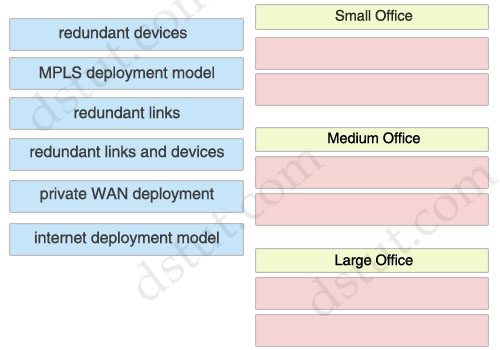

Drag the WAN characteristic on the left to the branch office model where it would most likely be used on the right

Answer:

Small Office:

+ redundant links

+ internet deployment model

Medium Office:

+ redundant devices

+ private WAN deployment

Large Office:

+ MPLS deployment model

+ redundant links and devices

Explanation

Small Office:

The small office is recommended for offices that have up to 50 users. The Layer 3 WAN services are based on the WAN and Internet deployment model. A T1 is used for the primary link, and an ADSL secondary link is used for backup.

Medium Office:

The medium branch design is recommended for branch offices of 50 to 100 users. Medium offices often use gateway redundancy protocols such as Hot Standby Router Protocol (HSRP) or Gateway Load Balancing Protocol (GLBP).

A private WAN generally consists of Frame Relay, ATM, private lines, and other traditional WAN connections. If security is needed, private WAN connections can be used in conjunction with encryption protocols such as Data Encryption Standard (DES), Triple DES (3DES), and Advanced Encryption Standard (AES). This technology is best suited for an enterprise with a moderate growth outlook where some remote or branch offices will need to be connected in the future.

Dual Frame Relay links in the medium office provide the private WAN services, which are used to connect back to the corporate offices via both of the access routers.

Large Office:

The large office supports between 100 and 1000 users. The WAN services use an MPLS deployment model with dual WAN links into the WAN cloud, which matches the "MPLS deployment model" and "redundant links" characteristics.
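The sizing rules above can be summarized in a small lookup. This is only a study aid: the function name is hypothetical, and because the source ranges overlap at exactly 50 and 100 users, the boundary handling below (50 counts as small, 100 as medium) is an assumption.

```python
def branch_profile(users: int) -> dict:
    """Map a user count to the branch model and WAN characteristics from the answer."""
    if users < 1:
        raise ValueError("users must be a positive number")
    if users <= 50:
        return {"model": "small",
                "wan": "Internet deployment model",
                "redundancy": "redundant links"}
    if users <= 100:
        return {"model": "medium",
                "wan": "private WAN deployment",
                "redundancy": "redundant devices"}
    if users <= 1000:
        return {"model": "large",
                "wan": "MPLS deployment model",
                "redundancy": "redundant links and devices"}
    raise ValueError("more than 1000 users is outside the branch models described here")


if __name__ == "__main__":
    for count in (30, 80, 400):
        print(count, branch_profile(count))
```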

Question 3

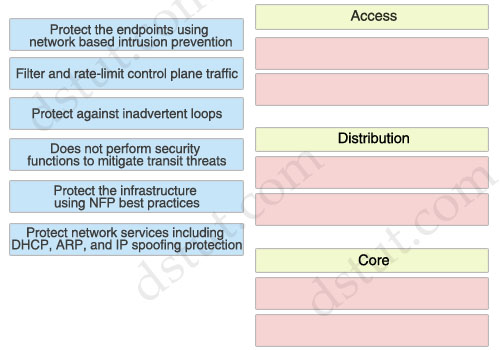

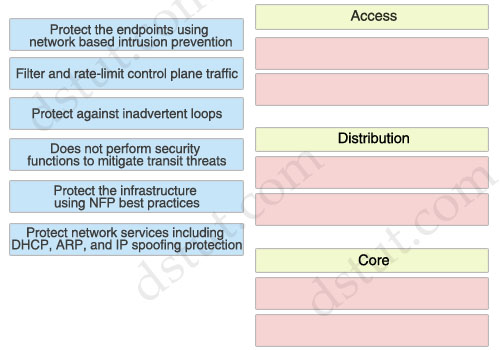

Drag the security provision on the left to the appropriate network module on the right

Answer:

Access:

+ Protect network services including DHCP, ARP, and IP spoofing protection

+ Protect against inadvertent loops

Distribution:

+ Protect the endpoints using network based intrusion prevention

+ Protect the infrastructure using NFP best practices

Core:

+ Does not perform security functions to mitigate transit threats

+ Filter and rate-limit control plane traffic

Explanation

Rate limiting caps the bandwidth that certain incoming traffic, such as ARP and DHCP requests, is allowed to consume.

Access layer:

Security measures used for securing the campus access layer include the following:

* Securing the endpoints using endpoint security software

* Securing the access infrastructure and protecting network services including DHCP, ARP, IP spoofing protection and protecting against inadvertent loops using Network Foundation Protection (NFP) best practices and Catalyst Integrated Security Features (CISF).

Distribution layer:

Security measures used for securing the campus distribution layer include the following:

* Protecting the endpoints using network-based intrusion prevention

* Protecting the infrastructure using NFP best practices

Core layer:

The primary role of security in the enterprise core module is to protect the core itself, not to apply policy to mitigate transit threats traversing through the core.

The following are the key areas of the Network Foundation Protection (NFP) baseline security best practices applicable to securing the enterprise core:

* Infrastructure device access—Implement dedicated management interfaces to the out-of-band (OOB) management network, limit the accessible ports and restrict the permitted communicators and the permitted methods of access, present legal notification, authenticate and authorize access using AAA, log and account for all access, and protect locally stored sensitive data (such as local passwords) from viewing and copying.

* Routing infrastructure—Authenticate routing neighbors, implement route filtering, use default passive interfaces, and log neighbor changes.

* Device resiliency and survivability—Disable unnecessary services, filter and rate-limit control-plane traffic, and implement redundancy.

* Network telemetry—Implement NTP to synchronize time to the same network clock; maintain device global and interface traffic statistics; maintain system status information (memory, CPU, and process); and log and collect system status, traffic statistics, and device access information.

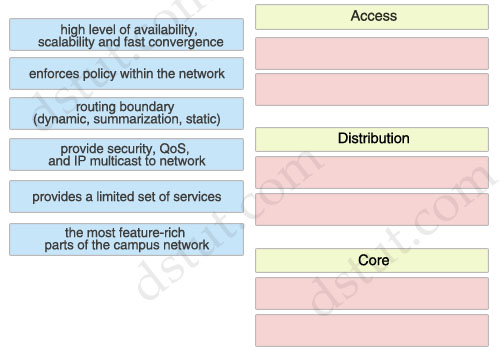

Question 4

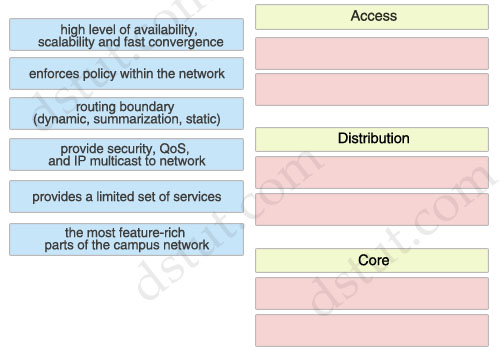

Drag the Campus Layer Design on the left to the appropriate location on the right

Answer:

Access:

+ routing boundary (dynamic, summarization, static)

+ the most feature-rich parts of the campus network

Distribution:

+ enforces policy within the network

+ provide security, QoS, and IP multicast to network

Core:

+ high level of availability, scalability and fast convergence

+ provides a limited set of services

Explanation

Campus Access Layer Network Design

The access layer is the first tier or edge of the campus, where end devices such as PCs, printers, cameras, Cisco TelePresence, etc. attach to the wired portion of the campus network. The wide variety of possible types of devices that can connect and the various services and dynamic configuration mechanisms that are necessary make the access layer one of the most feature-rich parts of the campus network.

Campus Distribution Layer

The campus distribution layer provides connectivity to the enterprise core for clients in the campus access layer. It aggregates the links from the access switches and serves as an integration point for campus security services such as IPS and network policy enforcement.

Distribution layer switches provide network foundation technologies such as routing, quality of service (QoS), and security.

Core Layer

The core layer provides scalability, high availability, and fast convergence to the network. The core layer is the backbone for campus connectivity, and is the aggregation point for the other layers and modules in the Cisco Enterprise Campus Architecture. The core provides a high level of redundancy and can adapt to changes quickly. Core devices are most reliable when they can accommodate failures by rerouting traffic and can respond quickly to changes in the network topology. The core devices implement scalable protocols and technologies, alternate paths, and load balancing. The core layer helps in scalability during future growth.

The campus core is in some ways the simplest yet most critical part of the campus. It provides a very limited set of services and is designed to be highly available and operate in an “always-on” mode. In the modern business world, the core of the network must operate as a non-stop 7x24x365 service.

Note:

It is a difficult question! Some characteristics are present at more than one layer, so it is difficult to classify them correctly. For example, a Cisco site says:

“The campus distribution layer acts as a services and control boundary between the campus access layer and the enterprise core. It is an aggregation point for all of the access switches providing policy enforcement, access control, route and link aggregation, and the isolation demarcation point between the campus access layer and the rest of the network.”

This suggests that the “routing boundary” should belong to the Distribution layer instead of the Access layer. But the Distribution layer also “enforces policy within the network” and “provide security, QoS, and IP multicast to network”.

After a lot of research, I decided to put the “routing boundary” in the Access layer, because this feature sits at the border between the Access and Distribution layers, so either could be chosen. The “provide security, QoS, and IP multicast to network” features mainly belong to the Distribution layer (the Official 640-864 CCDA guide mentions QoS, security filtering, and broadcast or multicast domain definition in the Distribution layer).

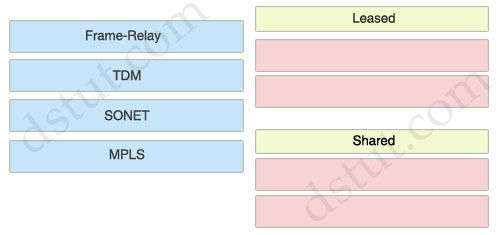

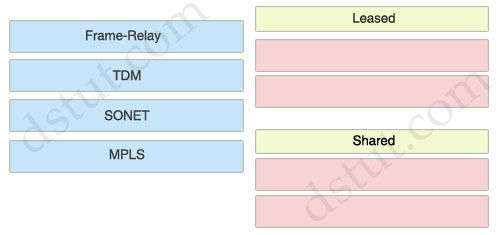

Question 5

Drag the WAN technology on the left to the most appropriate category on the right

Answer:

Leased:

+ TDM

+ SONET

Shared:

+ Frame-Relay

+ MPLS

Explanation

TDM and SONET are circuit-based, so they are leased-line technologies, while Frame Relay and MPLS are shared-circuit (packet-switched) WAN technologies.

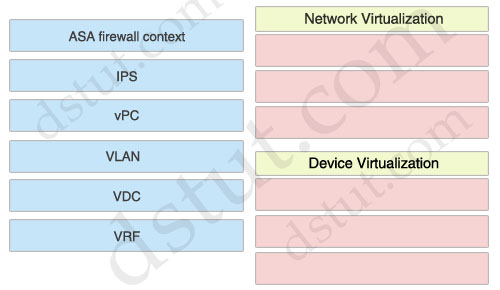

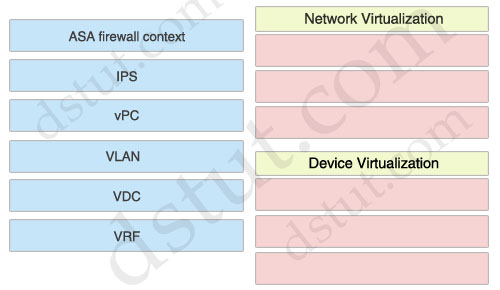

Question 6

Drag the technology on the left to the type of enterprise virtualization where it is most likely to be found on the right

Answer:

Network Virtualization:

+ VLAN

+ vPC

+ VRF

Device Virtualization:

+ ASA firewall context

+ IPS

+ VDC

Explanation

Network virtualization encompasses logically isolated network segments that share the same physical infrastructure. Each segment operates independently and is logically separate from the other segments. Each network segment appears to have its own privacy, security, independent set of policies, QoS levels, and independent routing paths.

Here are some examples of network virtualization technologies:

* VLAN: Virtual local-area network

* VSAN: Virtual storage-area network

* VRF: Virtual routing and forwarding

* VPN: Virtual private network

* vPC: Virtual Port Channel

Device virtualization allows for a single physical device to act like multiple copies of itself. Device virtualization enables many logical devices to run independently of each other on the same physical piece of hardware. The software creates virtual hardware that can function just like the physical network device. Another form of device virtualization entails using multiple physical devices to act as one logical unit.

Here are some examples of device virtualization technologies:

* Server virtualization: Virtual machines (VM)

* Cisco Application Control Engine (ACE) context

* Virtual Switching System (VSS)

* Cisco Adaptive Security Appliance (ASA) firewall context

* Virtual device contexts (VDC)

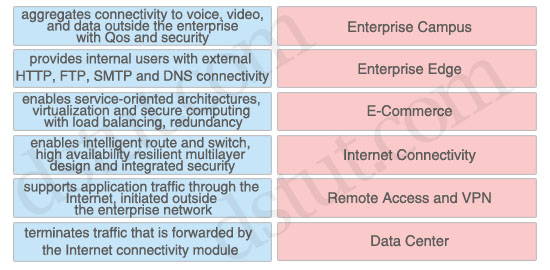

Question 7

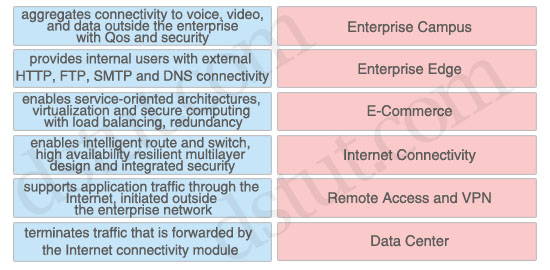

Drag the network function on the left to the functional area or module where it is most likely to be performed in the enterprise campus infrastructure on the right

Answer:

Enterprise Campus: enables intelligent route and switch, high availability resilient multilayer design and integrated security

Enterprise Edge: aggregates connectivity to voice, video, and data outside the enterprise with QoS and security

E-Commerce: supports application traffic through the Internet, initiated outside the enterprise network

Internet Connectivity: provides internal users with external HTTP, FTP, SMTP and DNS connectivity

Remote Access and VPN: terminates traffic that is forwarded by the Internet connectivity module

Data Center: enables service-oriented architectures, virtualization and secure computing with load balancing, redundancy


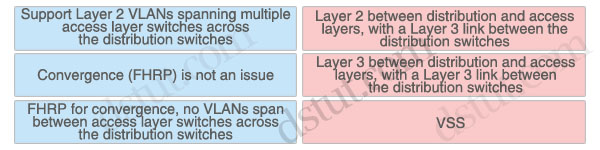

Question 8

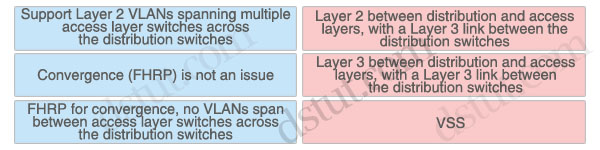

Drag the network characteristic on the left to the design method on the right which will best ensure redundancy at the building distribution layer

Answer:

Layer 2 between distribution and access layers, with a Layer 3 link between the distribution switches:

FHRP for convergence, no VLANs span between access layer switches across the distribution switches

Layer 2 between distribution and access layers, with a Layer 2 link between the distribution switches:

Support Layer 2 VLANs spanning multiple access layer switches across the distribution switches

VSS: Convergence (FHRP) is not an issue

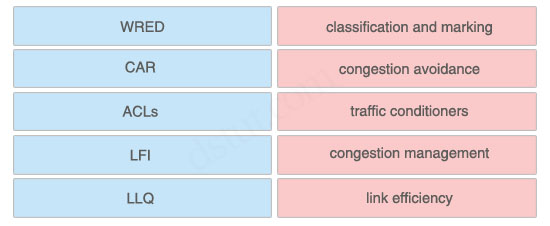

Question 9

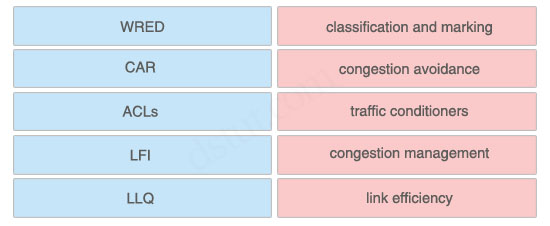

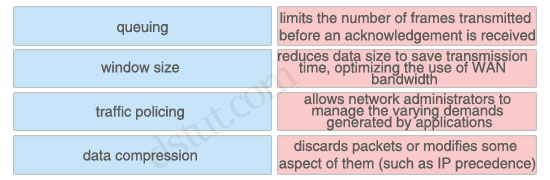

Click and drag the QoS feature type on the left to the category of QoS mechanism on the right.

Answer:

+ classification and marking: ACLs

+ congestion avoidance: WRED

+ traffic conditioners: CAR

+ congestion management: LLQ

+ link efficiency: LFI

Explanation

Classification is the process of partitioning traffic into multiple priority levels or classes of service. Information in the frame or packet header is inspected, and the frame’s priority is determined. Marking is the process of changing the priority or class of service (CoS) setting within a frame or packet to indicate its classification. Classification is usually performed with access control lists (ACL), QoS class maps, or route maps, using various match criteria.

Congestion-avoidance techniques monitor network traffic loads so that congestion can be anticipated and avoided before it becomes problematic. Congestion-avoidance techniques allow packets from streams identified as being eligible for early discard (those with lower priority) to be dropped when the queue is getting full. Congestion avoidance techniques provide preferential treatment for high priority traffic under congestion situations while maximizing network throughput and capacity utilization and minimizing packet loss and delay.

Weighted random early detection (WRED) is the Cisco implementation of the random early detection (RED) mechanism. WRED extends RED by using the IP Precedence bits in the IP packet header to determine which traffic should be dropped; the drop-selection process is weighted by the IP precedence.
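A minimal sketch of the weighted drop decision follows. It uses the usual RED shape: no drops below a minimum threshold, a linearly rising drop probability up to a maximum threshold, and tail drop beyond it. The per-precedence thresholds and the maximum drop probability are illustrative assumptions, not the defaults of any Cisco platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WredProfile:
    min_threshold: int           # average queue depth where random drops begin
    max_threshold: int           # average queue depth where every packet is dropped
    max_drop_probability: float  # drop probability just below max_threshold


# Assumed profiles: higher IP precedence gets a higher minimum threshold,
# so low-priority traffic starts being dropped earlier.
PROFILES = {prec: WredProfile(20 + 2 * prec, 40, 0.1) for prec in range(8)}


def drop_probability(avg_queue_depth: float, precedence: int) -> float:
    """Return the probability of dropping a packet with the given IP precedence."""
    profile = PROFILES[precedence]
    if avg_queue_depth < profile.min_threshold:
        return 0.0
    if avg_queue_depth >= profile.max_threshold:
        return 1.0
    span = profile.max_threshold - profile.min_threshold
    return profile.max_drop_probability * (avg_queue_depth - profile.min_threshold) / span


if __name__ == "__main__":
    for prec in (0, 5):
        print(prec, [round(drop_probability(depth, prec), 3) for depth in (10, 25, 35, 40)])
```

With these assumed numbers, precedence 0 traffic begins to see drops at an average depth of 20 packets, while precedence 5 traffic is untouched until the average reaches 30.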

Traffic conditioning consists of policing and shaping. Policing either discards the packet or modifies some aspect of it, such as its IP Precedence or CoS bits, when the policing agent determines that the packet meets a given criterion. In comparison, traffic shaping attempts to adjust the transmission rate of packets that match a certain criterion. A shaper typically delays excess traffic by using a buffer or queuing mechanism to hold packets and shape the flow when the source’s data rate is higher than expected. For example, generic traffic shaping uses a weighted fair queue to delay packets to shape the flow. Committed Access Rate (CAR) is a Cisco rate-limiting (policing) feature and is the traffic conditioner in this question.
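The difference between policing and shaping is easiest to see with a token bucket. The sketch below is a simplified single-rate policer, not the CAR implementation: the class name and parameters are hypothetical, and timestamps are passed in explicitly so the behaviour is deterministic.

```python
class TokenBucketPolicer:
    """Single-rate token bucket: conforming packets pass, excess packets are dropped."""

    def __init__(self, rate_bps: float, burst_bytes: int) -> None:
        self.rate_bytes_per_s = rate_bps / 8
        self.burst_bytes = burst_bytes
        self.tokens = float(burst_bytes)  # bucket starts full
        self.last_time = 0.0

    def allow(self, now: float, packet_bytes: int) -> bool:
        # Refill tokens for the time elapsed, capped at the burst size.
        elapsed = now - self.last_time
        self.last_time = now
        self.tokens = min(self.burst_bytes, self.tokens + elapsed * self.rate_bytes_per_s)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True   # conforming: forward the packet
        return False      # exceeding: a policer drops (or could re-mark) the packet


if __name__ == "__main__":
    policer = TokenBucketPolicer(rate_bps=8000, burst_bytes=1500)
    for t in (0.0, 0.1, 1.6):
        print(t, policer.allow(t, 1500))
```

A shaper would use the same bucket but, instead of returning `False`, hold the packet in a queue until enough tokens have accumulated.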

Congestion management includes two separate processes: queuing, which separates traffic into various queues or buffers, and scheduling, which decides from which queue traffic is to be sent next. There are two types of queues: the hardware queue (also called the transmit queue or TxQ) and software queues. Software queues schedule packets into the hardware queue based on the QoS requirements and include the following types: weighted fair queuing (WFQ), priority queuing (PQ), custom queuing (CQ), class-based WFQ (CBWFQ), and low latency queuing (LLQ).

LLQ is also known as Priority Queuing–Class-Based Weighted Fair Queuing (PQ-CBWFQ). LLQ provides a single priority queue, but it is preferred for VoIP networks because it can also configure guaranteed bandwidth for different classes of traffic. For example, all voice call traffic would be assigned to the priority queue, VoIP signaling and video would be assigned to a traffic class, FTP traffic would be assigned to a low-priority traffic class, and all other traffic would be assigned to a regular class.
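The scheduling idea behind LLQ can be sketched as one strict-priority queue that is always served first, plus weighted sharing among the remaining classes. This is a deliberately simplified model: the weighted selection below (serve the class with the least bytes sent per unit of weight) is a stand-in for CBWFQ, the class names and weights are assumptions, and the bandwidth limit that real LLQ applies to the priority queue is omitted.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple


class LlqScheduler:
    def __init__(self, class_weights: Dict[str, int]) -> None:
        self.priority: Deque[int] = deque()  # strict-priority queue (e.g. voice)
        self.classes: Dict[str, Deque[int]] = {name: deque() for name in class_weights}
        self.weights = dict(class_weights)
        self.sent_bytes = {name: 0 for name in class_weights}

    def enqueue(self, traffic_class: str, packet_bytes: int) -> None:
        if traffic_class == "priority":
            self.priority.append(packet_bytes)
        else:
            self.classes[traffic_class].append(packet_bytes)

    def dequeue(self) -> Optional[Tuple[str, int]]:
        # The priority queue is always emptied before any other class is served.
        if self.priority:
            return ("priority", self.priority.popleft())
        backlogged = [name for name, queue in self.classes.items() if queue]
        if not backlogged:
            return None
        # Serve the class that has received the least service relative to its weight.
        name = min(backlogged, key=lambda n: self.sent_bytes[n] / self.weights[n])
        size = self.classes[name].popleft()
        self.sent_bytes[name] += size
        return (name, size)


if __name__ == "__main__":
    scheduler = LlqScheduler({"video": 3, "ftp": 1})
    scheduler.enqueue("ftp", 1000)
    scheduler.enqueue("video", 1000)
    scheduler.enqueue("priority", 200)  # voice arrives last but leaves first
    while (item := scheduler.dequeue()) is not None:
        print(item)
```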

Link efficiency techniques include link fragmentation and interleaving (LFI) and compression. LFI prevents small voice packets from being queued behind large data packets, which could lead to unacceptable delays on low-speed links. With LFI, the voice gateway fragments large packets into smaller equal-sized frames and interleaves them with small voice packets so that a voice packet does not have to wait until the entire large data packet is sent. LFI reduces voice delay and makes it more predictable.
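The arithmetic behind LFI is just serialization delay: the time needed to clock a packet onto the wire. The sketch below computes that delay and the fragment size that keeps it under a target. The 64 kbps link, the 1500-byte packet, and the 10 ms target are example values chosen for illustration.

```python
def serialization_delay_ms(packet_bytes: int, link_bps: int) -> float:
    """Time to transmit one packet on the link, in milliseconds."""
    return packet_bytes * 8 / link_bps * 1000


def fragment_size_bytes(link_bps: int, target_delay_ms: float) -> int:
    """Largest fragment whose serialization delay stays within the target."""
    return int(link_bps * target_delay_ms / 1000 / 8)


if __name__ == "__main__":
    link = 64_000  # bits per second
    print(serialization_delay_ms(1500, link))  # delay a voice packet could wait behind one data packet
    print(fragment_size_bytes(link, 10))       # fragment size for an assumed 10 ms target
```

On the example link, an unfragmented 1500-byte data packet holds the line for 187.5 ms, while 80-byte fragments keep the wait to 10 ms per fragment.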

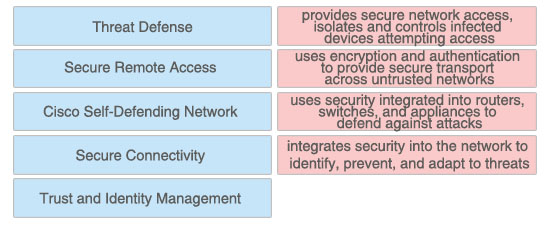

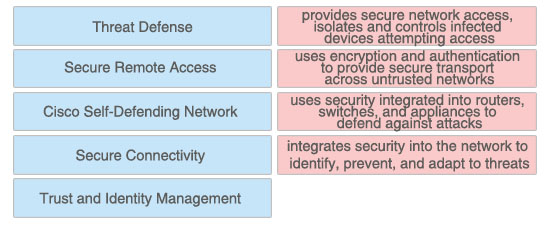

Question 10

Click and drag the Cisco Self-Defending Network term on the left to the SDN description on the right. Not all terms will be used.

Answer:

+ provides secure network access, isolates and controls infected devices attempting access: Trust and Identity Management

+ uses encryption and authentication to provide secure transport across untrusted networks: Secure Connectivity

+ uses security integrated into routers, switches, and appliances to defend against attacks: Threat Defense

+ integrates security into the network to identify, prevent, and adapt to threats: Cisco Self-Defending Network

Explanation

Trust and identity management solutions provide secure network access and admission at any point in the network and isolate and control infected or unpatched devices that attempt to access the network. If you are trusted, you are granted access.

“Trust” can be understood as the security policy applied between two or more network entities that allows or denies their communication in a specific circumstance. “Identity” is the “who” of a trust relationship.

The main purpose of Secure Connectivity is to protect the integrity and privacy of information, which is mostly done by encryption and authentication. The purpose of encryption is to guarantee confidentiality; only authorized entities can encrypt and decrypt data. Authentication is used to establish the subject’s identity. For example, users are required to provide a username and password to access a resource…

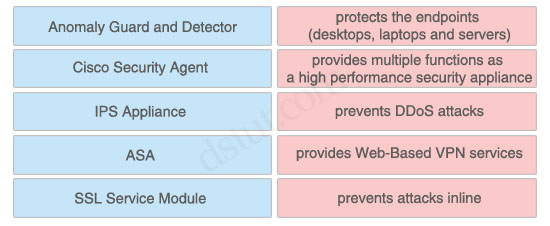

Question 11

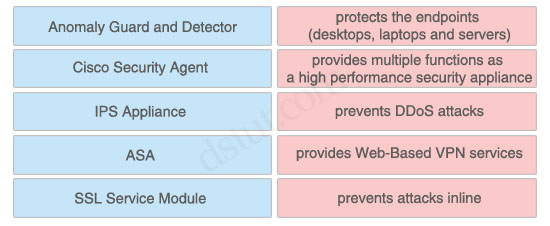

Match the Cisco security solution on the left to its function on the right.

Answer:

+ protects the endpoints (desktops, laptops and servers): Cisco Security Agent

+ provides multiple functions as a high performance security appliance: ASA

+ prevents DDoS attacks: Anomaly Guard and Detector

+ provides Web-Based VPN services: SSL Service Module

+ prevents attacks inline: IPS Appliance

Question 12

Answer:

Question 13

Answer:

Step 1: Prepare

Step 2: Plan

Step 3: Design

Step 4: Implement

Step 5: Operate

Step 6: Optimize

Question 14

Answer:

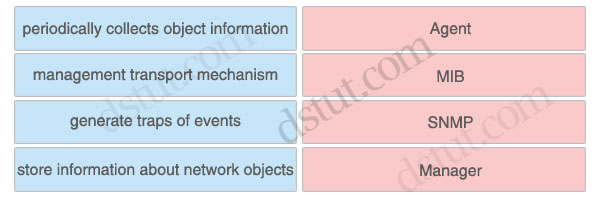

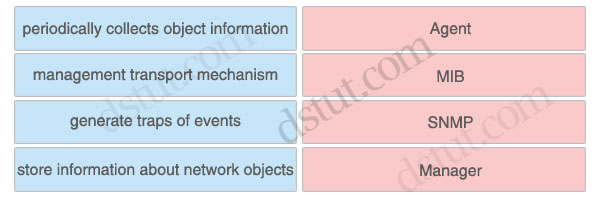

Agent: generate traps of events

MIB: store information about network objects

SNMP: management transport mechanism

Manager: periodically collects object information

Explanation

The SNMP system consists of three parts: SNMP manager, SNMP agent, and MIB.

The agent is the network management software that resides in the managed device. The agent gathers the information and puts it in SNMP format. It responds to the manager’s request for information and also generates traps.

A Management Information Base (MIB) is a collection of information that is stored on the local agent of the managed device. MIBs are databases of objects organized in a tree-like structure, with each branch containing similar objects.
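The tree-like organization of a MIB can be illustrated with a tiny in-memory model. This sketch does not speak SNMP over the network: the function names are hypothetical and the stored values are made up. The object identifiers are the standard MIB-II system group entries for sysDescr, sysUpTime, and sysName.

```python
from typing import Optional, Tuple

# OID -> (object name, value). Values are invented for this example.
MIB = {
    "1.3.6.1.2.1.1.1.0": ("sysDescr", "Example router"),
    "1.3.6.1.2.1.1.3.0": ("sysUpTime", 123456),
    "1.3.6.1.2.1.1.5.0": ("sysName", "branch-rtr-1"),
}


def oid_key(oid: str) -> Tuple[int, ...]:
    """Turn a dotted OID into a tuple so OIDs sort in tree order."""
    return tuple(int(part) for part in oid.split("."))


def snmp_get(oid: str) -> Optional[tuple]:
    """What an agent returns when the manager asks for one exact object."""
    return MIB.get(oid)


def snmp_get_next(oid: str) -> Optional[tuple]:
    """Return the first object after the given OID, which is how a manager walks the tree."""
    for candidate in sorted(MIB, key=oid_key):
        if oid_key(candidate) > oid_key(oid):
            return candidate, MIB[candidate]
    return None


if __name__ == "__main__":
    print(snmp_get("1.3.6.1.2.1.1.5.0"))
    print(snmp_get_next("1.3.6.1.2.1.1"))  # start walking the system branch
```

A manager that periodically collects object information is, in effect, repeating these get and get-next requests against the agent on each managed device.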

Drag the data center property on the left to the design aspect on the right it is most apt to affect

Answer:

Space: amount of racks, equipment, cabling, people

Weight load: rack servers vs blade servers

Power: variability of computing load, computing power and memory requirements

Cooling: arranging equipment racks face-to-face or back-to-back

Cabling: abundant, variable, well organized and easy to maintain

Security: disasters, fire suppression and alarm systems

Explanation

The data center space includes number of racks for equipment that will be installed. Other factor needs to be considered is the number of employees who will work in that data center.

Rack servers are low cost and provide high performance, unfortunately they take up space and consume a lot of energy to operate. Blade servers provide similar computing power when compared to rack mount servers, but require less space, power, and cabling. The chassis in most blade servers allows for shared power, Ethernet LAN, and Fibre Channel SAN connections, which reduce the number of cables needed.

The power in the data center facility is used to power cooling devices, servers, storage equipment, the network, and some lighting equipment. In server environments, the power usage depends on the computing load place on the server. For example, if the server needs to work harder by processing more data, it has to draw more AC power from the power supply, which in turn creates more heat that needs to be cooled down.

Cooling is used to control the temperature and humidity of the devices. The cabinets and racks should be arranged in the data center with an alternating pattern of “cold” and “hot” aisles. The cold aisle should have equipment arranged face to face, and the hot aisle should have equipment arranged back to back. In the cold aisle, there should be perforated floor tiles drawing cold air from the floor into the face of the equipment. This cold air passes through the equipment and flushes out the back into the hot aisle. The hot aisle does not have any perforated tiles, and this design prevents the hot air from mixing with the cold air.

The cabling in the data center is known as the passive infrastructure. Data center teams rely on a structured and well-organized cabling plant. It is important for cabling to be easy to maintain, abundant and capable of supporting various media types and requirements for proper data center operations.

Fire suppression and alarm systems are considered physical security and should be in place to protect equipment and data from natural disasters and theft.

Question 2

Drag the WAN characteristic on the left to the branch office model where it would most likely be used on the right

Answer:

Small Office:

+ redundant links

+ internet deployment model

Medium Office:

+ redundant devices

+ private WAN deployment

Large Office:

+ MPLS deployment model

+ redundant links and devices

Explanation

Small Office:

The small office is recommended for offices that have up to 50 users. The Layer 3 WAN services are based on the WAN and Internet deployment model. A T1 is used for the primary link, and an ADSL secondary link is used for backup.

Medium Office:

The medium branch design is recommended for branch offices of 50 to 100 users. Medium Offices often use redundancy gateway services like Hot Standby Router Protocol (HSRP) or Gateway Load Balancing Protocol (GLBP).

Private WAN generally consists of Frame Relay, ATM, private lines, and other traditional WAN connections. If security is needed, private WAN connections can be used in conjunction with encryption protocols such as Digital Encryption Standard (DES), Triple DES (3DES), and Advanced Encryption Standard (AES). This technology is best suited for an enterprise with moderate growth outlook where some remote or branch offices will need to be connected in the future.

Dual Frame Relay links in medium office provide the private WAN services, which are used to connect back to the corporate offices via both of the access routers.

Large Office:

The large office supports between 100 and 1000 users. The WAN services use an MPLS deployment model with dual WAN links into the WAN cloud -> MPLS & redundant links.

Question 3

Drag the security provision on the left to the appropriate network module on the right

Answer:

Access:

+ Protect network services including DHCP, ARP, and IP spoofing protection

+ Protect against inadvertent loops

Distribution:

+ Protect the endpoints using network based intrusion prevention

+ Protect the infrastructure using NFP best practices

Core:

+ Does not perform security functions to mitigate transit threats

+ Filter and rate-limit control plane traffic

Explanation

Rate limiting controls the rate of bandwidth that incoming traffic is using, such as ARPs and DHCP requests.

Access layer:

Some security measures used for securing the campus access layer, including the following:

* Securing the endpoints using endpoint security software

* Securing the access infrastructure and protecting network services including DHCP, ARP, IP spoofing protection and protecting against inadvertent loops using Network Foundation Protection (NFP) best practices and Catalyst Integrated Security Features (CISF).

Distribution layer:

Security measures used for securing the campus distribution layer including the following:

* Protecting the endpoints using network-based intrusion prevention

* Protection the infrastructure using NFP best practices

Core layer:

The primary role of security in the enterprise core module is to protect the core itself, not to apply policy to mitigate transit threats traversing through the core.

The following are the key areas of the Network Foundation Protection (NFP) baseline security best practices applicable to securing the enterprise core:

* Infrastructure device access—Implement dedicated management interfaces to the out-of-band (OOB) management network, limit the accessible ports and restrict the permitted communicators and the permitted methods of access, present legal notification, authenticate and authorize access using AAA, log and account for all access, and protect locally stored sensitive data (such as local passwords) from viewing and copying.

* Routing infrastructure—Authenticate routing neighbors, implement route filtering, use default passive interfaces, and log neighbor changes.

* Device resiliency and survivability—Disable unnecessary services, filter and rate-limit control-plane traffic, and implement redundancy.

* Network telemetry—Implement NTP to synchronize time to the same network clock; maintain device global and interface traffic statistics; maintain system status information (memory, CPU, and process); and log and collect system status, traffic statistics, and device access information.

Question 4

Drag the Campus Layer Design on the left to the appropriate location on the right

Answer:

Access:

+ routing boundary (dynamic, summarization, static)

+ the most feature-rich parts of the campus network

Distribution:

+ enforces policy within the network

+ provide security, QoS, and IP multicast to network

Core:

+ high level of availability, scalability and fast convergence

+ provides a limited set of services

Explanation

Campus Access Layer Network Design

The access layer is the first tier or edge of the campus, where end devices such as PCs, printers, cameras, Cisco TelePresence, etc. attach to the wired portion of the campus network. The wide variety of possible types of devices that can connect and the various services and dynamic configuration mechanisms that are necessary make the access layer one of the most feature-rich parts of the campus network.

Campus Distribution Layer

The campus distribution layer provides connectivity to the enterprise core for clients in the campus access layer. It aggregates the links from the access switches and serves as an integration point for campus security services such as IPS and network policy enforcement.

Distribution layer switches perform network foundation technologies such as routing, quality of service (QoS), and security.

Core Layer

The core layer provides scalability, high availability, and fast convergence to the network. The core layer is the backbone for campus connectivity, and is the aggregation point for the other layers and modules in the Cisco Enterprise Campus Architecture. The core provides a high level of redundancy and can adapt to changes quickly. Core devices are most reliable when they can accommodate failures by rerouting traffic and can respond quickly to changes in the network topology. The core devices implement scalable protocols and technologies, alternate paths, and load balancing. The core layer helps in scalability during future growth.

The campus core is in some ways the simplest yet most critical part of the campus. It provides a very limited set of services and is designed to be highly available and operate in an “always-on” mode. In the modern business world, the core of the network must operate as a non-stop 7x24x365 service.

Note:

It is a difficult question! Some characteristics are present at more than one layer so it is difficult to classify correctly. For example, a Cisco site says:

“The campus distribution layer acts as a services and control boundary between the campus access layer and the enterprise core. It is an aggregation point for all of the access switches providing policy enforcement, access control, route and link aggregation, and the isolation demarcation point between the campus access layer and the rest of the network.”

It means that the “routing boundary” should belong to the Distribution Layer instead of Access Layer. But the Distribution Layer also “enforces policy within the network” & “provide security, QoS, and IP multicast to network”.

After a lot of research, I decide to put the “routing boundary” to the Access Layer because this feature seems to be at the border of Access & Distribution layers so we can choose either. The “provide security, QoS, and IP multicast to network” features mainly belong to the Distribution layer (the Official 640-864 CCDA mentions about QoS, Security filtering & Broadcast or multicast domain definition in the Distribution layer)

Question 5

Drag the WAN technology on the left to the most appropriate category on the right

Answer:

Leased:

+ TDM

+ SONET

Shared:

+ Frame-Relay

+ MPLS

Explanation

TDM & SONET are circuit-based so they are leased-line while Frame-Relay & MPLS are shared-circuit or packet-switched WAN

Question 6

Drag the technology on the left to the type of enterprise virtualization where it is most likely to be found on the right

Answer:

Network Virtualization:

+ VLAN

+ vPC

+ VRF

Device Virtualization:

+ ASA firewall context

+ IPS

+ VDC

Explanation

Network virtualization encompasses logical isolated network segments that share the same physical infrastructure. Each segment operates independently and is logically separate from the other segments. Each network segment appears with its own privacy, security, independent set of policies, QoS levels, and independent routing paths.

Here are some examples of network virtualization technologies:

* VLAN: Virtual local-area network

* VSAN: Virtual storage-area network

* VRF: Virtual routing and forwarding

* VPN: Virtual private network

* vPC: Virtual Port Channel
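
To make the "logically separate segments on shared hardware" idea concrete, here is a minimal Python sketch of VRF-style isolation: one toy router object keeps an independent routing table per VRF, so the same prefix can exist in two VRFs with different next hops. The class name `VrfRouter`, the VRF names, and all addresses are hypothetical and chosen only for illustration; this is not how any real device implements VRF.

```python
import ipaddress


class VrfRouter:
    """Toy router holding one independent routing table per VRF (illustrative only)."""

    def __init__(self):
        self.tables = {}  # VRF name -> list of (network, next_hop)

    def add_route(self, vrf, prefix, next_hop):
        network = ipaddress.ip_network(prefix)
        self.tables.setdefault(vrf, []).append((network, next_hop))

    def lookup(self, vrf, address):
        """Longest-prefix match restricted to the named VRF's table."""
        addr = ipaddress.ip_address(address)
        matches = [(net, hop) for net, hop in self.tables.get(vrf, []) if addr in net]
        if not matches:
            return None
        return max(matches, key=lambda m: m[0].prefixlen)[1]


# Hypothetical VRFs sharing one physical router with overlapping address space.
router = VrfRouter()
router.add_route("RED", "10.1.0.0/16", "192.0.2.1")
router.add_route("BLUE", "10.1.0.0/16", "198.51.100.1")
router.add_route("BLUE", "10.1.5.0/24", "198.51.100.2")

print(router.lookup("RED", "10.1.5.9"))
print(router.lookup("BLUE", "10.1.5.9"))
```

The same destination address resolves differently depending on the VRF, which is the independent-routing-path property described above.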

Device virtualization allows for a single physical device to act like multiple copies of itself. Device virtualization enables many logical devices to run independently of each other on the same physical piece of hardware. The software creates virtual hardware that can function just like the physical network device. Another form of device virtualization entails using multiple physical devices to act as one logical unit.

Here are some examples of device virtualization technologies:

* Server virtualization: Virtual machines (VM)

* Cisco Application Control Engine (ACE) context

* Virtual Switching System (VSS)

* Cisco Adaptive Security Appliance (ASA) firewall context

* Virtual device contexts (VDC)

Question 7

Drag the network function on the left to the functional area or module where it is most likely to be performed in the enterprise campus infrastructure on the right

Answer:

Enterprise Campus: enables intelligent route and switch, high availability resilient multilayer design and integrated security

Enterprise Edge: aggregates connectivity to voice, video, and data outside the enterprise with QoS and security

E-Commerce: supports application traffic through the Internet, initiated outside the enterprise network

Internet Connectivity: provides internal users with external HTTP, FTP, SMTP and DNS connectivity

Remote Access and VPN: terminates traffic that is forwarded by the Internet connectivity module

Data Center: enables service-oriented architectures, virtualization and secure computing with load balancing, redundancy

Access:

+ Protect network services including DHCP, ARP, and IP spoofing protection

+ Protect against inadvertent loops

Distribution:

+ Protect the endpoints using network based intrusion prevention

+ Protect the infrastructure using NFP best practices

Core:

+ Does not perform security functions to mitigate transit threats

+ Filter and rate-limit control plane traffic

Question 8

Drag the network characteristic on the left to the design method on the right which will best ensure redundancy at the building distribution layer

Answer:

Layer 2 between distribution and access layers, with a Layer 3 link between the distribution switches:

FHRP for convergence, no VLANs span between access layer switches across the distribution switches

Layer 2 between distribution and access layers, with a Layer 2 link between the distribution switches:

Support Layer 2 VLANs spanning multiple access layer switches across the distribution switches

VSS: Convergence (FHRP) is not an issue

Question 9

Click and drag the QoS feature type on the left to the category of QoS mechanism on the right.

Answer:

+ classification and marking: ACLs

+ congestion avoidance: WRED

+ traffic conditioners: CAR

+ congestion management: LLQ

+ link efficiency: LFI

Explanation

Classification is the process of partitioning traffic into multiple priority levels or classes of service. Information in the frame or packet header is inspected, and the frame’s priority is determined. Marking is the process of changing the priority or class of service (CoS) setting within a frame or packet to indicate its classification. Classification is usually performed with access control lists (ACL), QoS class maps, or route maps, using various match criteria.

Congestion-avoidance techniques monitor network traffic loads so that congestion can be anticipated and avoided before it becomes problematic. Congestion-avoidance techniques allow packets from streams identified as being eligible for early discard (those with lower priority) to be dropped when the queue is getting full. Congestion avoidance techniques provide preferential treatment for high priority traffic under congestion situations while maximizing network throughput and capacity utilization and minimizing packet loss and delay.

Weighted random early detection (WRED) is the Cisco implementation of the random early detection (RED) mechanism. WRED extends RED by using the IP Precedence bits in the IP packet header to determine which traffic should be dropped; the drop-selection process is weighted by the IP precedence.
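
The weighting by IP precedence can be sketched in a few lines of Python. This is a simplified model, not Cisco's implementation: it assumes the average queue depth is already known (real WRED computes a moving average), and the per-precedence thresholds and maximum drop probability in `PROFILES` are hypothetical values picked for illustration.

```python
import random

# Hypothetical profiles: precedence -> (min_threshold, max_threshold, max_drop_probability).
# Lower-precedence traffic starts being dropped at a shallower queue depth.
PROFILES = {
    0: (20, 40, 0.10),
    3: (28, 40, 0.10),
    5: (36, 40, 0.10),
}


def drop_probability(avg_queue_depth, precedence):
    """Return the drop probability for a packet given the average queue depth."""
    min_th, max_th, max_p = PROFILES[precedence]
    if avg_queue_depth < min_th:
        return 0.0  # queue is short: never drop
    if avg_queue_depth >= max_th:
        return 1.0  # queue is at or past the maximum threshold: always drop
    # Between the thresholds the probability rises linearly up to max_p.
    return max_p * (avg_queue_depth - min_th) / (max_th - min_th)


def should_drop(avg_queue_depth, precedence, rng=random):
    """Randomly decide whether to drop, weighted by the packet's precedence."""
    return rng.random() < drop_probability(avg_queue_depth, precedence)


for prec in sorted(PROFILES):
    print(prec, drop_probability(30, prec))
```

At the same queue depth, precedence 0 traffic already has a nonzero drop probability while precedence 5 traffic is still never dropped.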

Traffic conditioning consists of policing and shaping. Policing either discards the packet or modifies some aspect of it, such as its IP Precedence or CoS bits, when the policing agent determines that the packet meets a given criterion. In comparison, traffic shaping attempts to adjust the transmission rate of packets that match a certain criterion. A shaper typically delays excess traffic by using a buffer or queuing mechanism to hold packets and shape the flow when the source’s data rate is higher than expected. For example, generic traffic shaping uses a weighted fair queue to delay packets to shape the flow. Traffic conditioning is also referred to as Committed Access Rate (CAR).
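
The difference between the two conditioners can be shown with a small Python sketch. Both classes below are illustrative assumptions: the policer is modeled as a single token bucket that transmits or drops, and the shaper simply computes a delayed send time instead of dropping. The class names, rates, and burst size are hypothetical, and real policers can also re-mark packets rather than drop them.

```python
class TokenBucketPolicer:
    """Single-rate policer: conforming packets pass, excess packets are dropped."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate_bytes_per_s = rate_bps / 8
        self.burst_bytes = burst_bytes
        self.tokens = float(burst_bytes)  # bucket starts full
        self.last_time = 0.0

    def police(self, now, size_bytes):
        # Refill tokens for the elapsed time, capped at the burst size.
        elapsed = now - self.last_time
        self.tokens = min(self.burst_bytes, self.tokens + elapsed * self.rate_bytes_per_s)
        self.last_time = now
        if size_bytes <= self.tokens:
            self.tokens -= size_bytes
            return "transmit"
        return "drop"


class Shaper:
    """Shaper: excess packets are held back (delayed) rather than dropped."""

    def __init__(self, rate_bps):
        self.rate_bytes_per_s = rate_bps / 8
        self.next_send_time = 0.0

    def schedule(self, now, size_bytes):
        """Return the time at which the packet may leave the buffer."""
        send_time = max(now, self.next_send_time)
        self.next_send_time = send_time + size_bytes / self.rate_bytes_per_s
        return send_time


policer = TokenBucketPolicer(rate_bps=8000, burst_bytes=1500)
shaper = Shaper(rate_bps=8000)
for _ in range(2):
    # Two 1000-byte packets arriving back to back at t = 0.
    print(policer.police(0.0, 1000), shaper.schedule(0.0, 1000))
```

For the same burst, the policer discards the second packet while the shaper sends it one second later.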

Congestion management includes two separate processes: queuing, which separates traffic into various queues or buffers, and scheduling, which decides from which queue traffic is to be sent next. There are two types of queues: the hardware queue (also called the transmit queue or TxQ) and software queues. Software queues schedule packets into the hardware queue based on the QoS requirements and include the following types: weighted fair queuing (WFQ), priority queuing (PQ), custom queuing (CQ), class-based WFQ (CBWFQ), and low latency queuing (LLQ).

LLQ is also known as Priority Queuing–Class-Based Weighted Fair Queuing (PQ-CBWFQ). LLQ provides a single priority queue, but it is preferred for VoIP networks because it can also guarantee bandwidth for different classes of traffic. For example, all voice call traffic would be assigned to the priority queue, VoIP signaling and video would be assigned to a traffic class, FTP traffic would be assigned to a low-priority traffic class, and all other traffic would be assigned to a regular class.
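
A rough Python sketch of the LLQ idea follows: one strict-priority queue that is always served first, plus several class queues that share the remaining capacity. As a simplifying assumption, the class queues are served with weighted round robin by packet count rather than true CBWFQ bandwidth guarantees, and the policing that real LLQ applies to the priority queue is omitted. The class name `LlqScheduler`, the traffic class names, and the weights are hypothetical.

```python
from collections import deque


class LlqScheduler:
    """Strict-priority queue plus weighted round robin over class queues (simplified)."""

    def __init__(self, class_weights):
        self.priority = deque()
        self.classes = {name: deque() for name in class_weights}
        # Expand weights into a repeating service order, e.g. {"a": 2, "b": 1} -> a, a, b.
        self.order = [name for name, weight in class_weights.items() for _ in range(weight)]
        self.position = 0

    def enqueue(self, packet, traffic_class=None):
        """Packets without a traffic class go to the priority queue (e.g. voice)."""
        if traffic_class is None:
            self.priority.append(packet)
        else:
            self.classes[traffic_class].append(packet)

    def dequeue(self):
        # The priority queue is always drained before any class queue is served.
        if self.priority:
            return self.priority.popleft()
        for _ in range(len(self.order)):
            name = self.order[self.position]
            self.position = (self.position + 1) % len(self.order)
            if self.classes[name]:
                return self.classes[name].popleft()
        return None  # nothing queued anywhere


sched = LlqScheduler({"signaling": 2, "ftp": 1})
sched.enqueue("ftp-1", "ftp")
sched.enqueue("sig-1", "signaling")
sched.enqueue("voice-1")  # arrives last but is sent first
print([sched.dequeue() for _ in range(3)])
```

The voice packet jumps ahead of everything already queued, which is the behavior that makes LLQ attractive for VoIP.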

Link efficiency techniques include link fragmentation and interleaving (LFI) and compression. LFI prevents small voice packets from being queued behind large data packets, which could lead to unacceptable delays on low-speed links. With LFI, the voice gateway fragments large packets into smaller equal-sized frames and interleaves them with small voice packets so that a voice packet does not have to wait until the entire large data packet is sent. LFI reduces voice delay and makes it more predictable.
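
The arithmetic behind LFI is worth working through once. The Python sketch below computes the serialization delay of a packet (size in bits divided by link rate) and the fragment size needed to keep that delay under a target. The 64 kbps link, the 1500-byte packet, and the 10 ms target are assumptions chosen for illustration, not values from the question.

```python
def serialization_delay_ms(size_bytes, link_bps):
    """Time needed to clock a packet onto the wire, in milliseconds."""
    return size_bytes * 8 / link_bps * 1000


def fragment_size_bytes(link_bps, target_delay_ms):
    """Largest fragment whose serialization delay stays within the target."""
    return int(link_bps * target_delay_ms / 1000 / 8)


def fragment(size_bytes, frag_bytes):
    """Split a packet into fragment sizes; the last fragment may be shorter."""
    full, rest = divmod(size_bytes, frag_bytes)
    return [frag_bytes] * full + ([rest] if rest else [])


link_bps = 64_000                          # assumed low-speed link
frag = fragment_size_bytes(link_bps, 10)   # assumed 10 ms per-fragment delay target
print(serialization_delay_ms(1500, link_bps))  # delay a voice packet could wait without LFI
print(frag, len(fragment(1500, frag)))
```

Without fragmentation, a voice packet stuck behind a single 1500-byte data packet waits for the whole packet to serialize; with fragmentation it waits for at most one fragment.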

Question 10

Click and drag the Cisco Self-Defending Network term on the left to the SDN description on the right. Not all terms will be used.

Answer:

+ provides secure network access, isolates and controls infected devices attempting access: Trust and Identity Management

+ uses encryption and authentication to provide secure transport across untrusted networks: Secure Connectivity

+ uses security integrated into routers, switches, and appliances to defend against attacks: Threat Defense

+ integrates security into the network to identify, prevent, and adapt to threats: Cisco Self-Defending Network

Explanation

Trust and identity management solutions provide secure network access and admission at any point in the network and isolate and control infected or unpatched devices that attempt to access the network. If you are trusted, you are granted access.

We can understand “trust” as the security policy applied to two or more network entities that determines whether they may communicate in a specific circumstance. “Identity” is the “who” of a trust relationship.

The main purpose of Secure Connectivity is to protect the integrity and privacy of the information and it is mostly done by encryption and authentication. The purpose of encryption is to guarantee confidentiality; only authorized entities can encrypt and decrypt data. Authentication is used to establish the subject’s identity. For example, the users are required to provide username and password to access a resource…

Question 11

Match the Cisco security solution on the left to its function on the right.

Answer:

+ protects the endpoints (desktops, laptops and servers): Cisco Security Agent

+ provides multiple functions as a high performance security appliance: ASA

+ prevents DDoS attacks: Anomaly Guard and Detector

+ provides Web-Based VPN services: SSL Service Module

+ prevents attacks inline: IPS Appliance

Question 12

Answer:

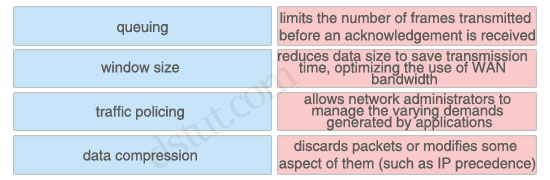

limits the number of frames transmitted before an acknowledgement is received: window size

reduces data size to save transmission time, optimizing the use of WAN bandwidth: data compression

allows network administrators to manage the varying demands generated by applications: queuing

discards packets or modifies some aspect of them (such as IP precedence): traffic policing

Question 13

Place the PPDIOO Methodology in the correct order

| Phase | Slot |
| --- | --- |
| Optimize | Step 1 |
| Design | Step 2 |
| Prepare | Step 3 |
| Implement | Step 4 |
| Operate | Step 5 |
| Plan | Step 6 |

Answer:

Step 1: Prepare

Step 2: Plan

Step 3: Design

Step 4: Implement

Step 5: Operate

Step 6: Optimize

Question 14

Answer:

Agent: generate traps of events

MIB: store information about network objects

SNMP: management transport mechanism

Manager: periodically collects object information

Explanation

The SNMP system consists of three parts: SNMP manager, SNMP agent, and MIB.

The agent is the network management software that resides in the managed device. The agent gathers the information and puts it in SNMP format. It responds to the manager’s request for information and also generates traps.

A Management Information Base (MIB) is a collection of information that is stored on the local agent of the managed device. MIBs are databases of objects organized in a tree-like structure, with each branch containing similar objects.
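
The tree-like structure of a MIB can be illustrated with a small Python sketch. Objects are addressed by dotted object identifiers (OIDs), and objects under the same branch share a prefix. The `Mib` and `MibNode` classes are hypothetical and exist only for illustration; the OIDs shown are the standard MIB-II locations of `sysDescr`, `sysName`, and `ifNumber`, while the stored values are made up.

```python
class MibNode:
    def __init__(self, name, value=None):
        self.name = name
        self.value = value
        self.children = {}  # sub-identifier (int) -> MibNode


class Mib:
    """Toy MIB: a tree of objects keyed by dotted OID (illustrative only)."""

    def __init__(self):
        self.root = MibNode("root")

    def register(self, oid, name, value):
        node = self.root
        for part in (int(p) for p in oid.split(".")):
            node = node.children.setdefault(part, MibNode("unnamed"))
        node.name, node.value = name, value

    def _find(self, oid):
        node = self.root
        for part in (int(p) for p in oid.split(".")):
            node = node.children.get(part)
            if node is None:
                return None
        return node

    def get(self, oid):
        """Return the value stored at an exact OID, or None if absent."""
        node = self._find(oid)
        return None if node is None else node.value

    def walk(self, oid):
        """Return (oid, name, value) for every valued object under a branch, in order."""
        results = []
        start = self._find(oid)
        stack = [(oid, start)] if start is not None else []
        while stack:
            current_oid, node = stack.pop()
            if node.value is not None:
                results.append((current_oid, node.name, node.value))
            # Push children in reverse so the smallest sub-identifier is visited first.
            for number in sorted(node.children, reverse=True):
                stack.append((f"{current_oid}.{number}", node.children[number]))
        return results


mib = Mib()
mib.register("1.3.6.1.2.1.1.1", "sysDescr", "Example router")  # made-up value
mib.register("1.3.6.1.2.1.1.5", "sysName", "core-sw1")         # made-up value
mib.register("1.3.6.1.2.1.2.1", "ifNumber", 24)                # made-up value

print(mib.get("1.3.6.1.2.1.1.5"))
print([name for _, name, _ in mib.walk("1.3.6.1.2.1.1")])
```

Walking the `1.3.6.1.2.1.1` branch returns only the system-group objects, which mirrors how similar objects are grouped under one branch of the tree.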